The post Pixel 7 Pro Actually Challenges My $10,000 DSLR Camera Setup first appeared on Joggingvideo.com.

Google got my attention by bragging about the Pixel 7 Pro phone’s “pro-level zoom” and asserting that the smartphone’s photography features can challenge traditional cameras. I’m one of those serious photographers who lugs around a heavy camera and a bunch of bulky lenses. But I also love phone photography, so I decided to test Google’s claims.

At its October launch event, Google touted the Pixel 7 Pro’s telephoto zoom for magnifying distant subjects, its Tensor G2-powered AI processing, its faster Night Sight for low-light scenes and a new macro ability for closeup photos. “It cleverly combines state-of-the-art hardware, software and machine learning to create amazing zoom photos across any magnification,” Pixel camera hardware chief Alexander Schiffhauer said at the phone’s launch event. Google wants you to think of this phone as offering a continuous zoom range from ultrawide angle to supertelephoto.

As you might imagine, I got better results from my “real” camera equipment, which would cost $10,000 if purchased new today. Even though my Canon 5D Mark IV is now 6 years old, it’s hard to beat a big image sensor and big lenses when it comes to color, sharpness, detail and a wide dynamic range spanning bright and dark tones.

But the Pixel 7 Pro’s photographic flexibility challenges my camera setup better than any other phone I’ve used, even outperforming my DSLR in some circumstances and earning a “stellar” rating from CNET editor Andrew Lanxon. While my camera and four lenses fill a whole backpack, Google’s smartphone fits in my pocket. And of course that $900 smartphone lets me share a selfie, check my email, pay for the groceries and tackle the daily crossword puzzle.

With the steady annual improvement in smartphone camera hardware and image processing, a smartphone isn’t just a better-than-nothing camera. These little slices of electronics are increasingly able to nail important shots and open up new creative possibilities for those who are discovering the rewards of photography.

I’ll keep hauling my DSLR on hikes and family outings. But because I won’t always have it with me, the Pixel 7 Pro — in particular its zoom and low-light abilities — means I won’t be as worried about missing the shot when I don’t.

My Canon 5D Mark IV, which costs $2,700 new these days, most often has the $1,900 Canon EF 24-70mm f/2.8L II USM lens mounted. I also use the $2,400 EF 100-400mm f/4.5-5.6L IS II USM for telephoto shots, the $1,300 ultrawide EF 16-35mm f/4L IS USM zoom, the $1,300 EF 100mm f/2.8L Macro IS USM for closeups, and the $429 Extender EF 1.4X III for more telephoto reach when photographing birds. Here’s how that gear stacks up against the Pixel 7 Pro’s 0.5x ultrawide, 1x main camera and 5x telephoto camera.
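As a quick sanity check on that $10,000 figure, here is a small sketch that adds up the prices quoted above. The dictionary labels are shorthand for illustration, and the total leaves out any gear the article doesn't price, such as the Sigma 35mm lens mentioned later.

```python
# Prices as quoted in the article, in US dollars, for gear bought new.
gear_prices = {
    "Canon 5D Mark IV body": 2700,
    "EF 24-70mm f/2.8L II USM": 1900,
    "EF 100-400mm f/4.5-5.6L IS II USM": 2400,
    "EF 16-35mm f/4L IS USM": 1300,
    "EF 100mm f/2.8L Macro IS USM": 1300,
    "Extender EF 1.4X III": 429,
}

total = sum(gear_prices.values())
phone_price = 900  # the article's rounded price for the Pixel 7 Pro

print(f"DSLR kit total: ${total:,}")
print(f"That is roughly {total / phone_price:.0f} times the price of the phone")
```

The listed items come to a little over $10,000, which lines up with the headline number.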

Google Pixel 7 Pro vs. Canon 5D Mark IV, main camera

With plenty of light, the Pixel 7 Pro’s 24mm main camera does a good job capturing color and detail in its 12-megapixel images. Check the comparisons here (and note that my DSLR shoots in a more elongated 3:2 aspect ratio than the Pixel 7 Pro’s 4:3).

Pixel peeping shows the phone can’t hold a candle to my 30-megapixel DSLR when it comes to detail. If you’re printing posters or need a lot of detail for photo editing, a modern DSLR or mirrorless camera is worth it. But 12 megapixels is plenty for most purposes. Check the below cropped images to see what’s going on up close.

Google missed a chance to shoot even higher resolution photos than my 30-megapixel DSLR, though. The Pixel 7 Pro’s main camera has a 50-megapixel sensor. It takes 12-megapixel photos using an approach called pixel binning that combines each 2×2 pixel group on the sensor into one effectively larger pixel. That means better color and low-light performance when shooting at 24mm. But you can use those 50 megapixels differently by skipping the pixel binning and shooting in the sensor’s full resolution when there’s sufficient light. That’s exactly what Apple does with the iPhone 14 Pro camera, and I wish Google did the same.
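To make pixel binning concrete, here is a minimal sketch that averages each 2×2 group of values on a toy grid. It is a simplification under stated assumptions: the 4×4 readout values are made up, the grid is treated as monochrome, and a real sensor's color filter pattern and Google's actual processing are ignored.

```python
def bin_2x2(sensor):
    """Average each 2x2 block of a 2D grid of pixel values into one value."""
    rows, cols = len(sensor), len(sensor[0])
    binned = []
    for r in range(0, rows - 1, 2):
        row = []
        for c in range(0, cols - 1, 2):
            block_sum = (sensor[r][c] + sensor[r][c + 1]
                         + sensor[r + 1][c] + sensor[r + 1][c + 1])
            row.append(block_sum / 4)
        binned.append(row)
    return binned


# Hypothetical 4x4 readout: 16 small pixels become 4 larger ones.
sensor = [
    [10, 12, 50, 52],
    [14, 16, 54, 56],
    [90, 92, 30, 32],
    [94, 96, 34, 36],
]
binned = bin_2x2(sensor)
print(binned)  # [[13.0, 53.0], [93.0, 33.0]]

# The same 4-to-1 reduction applied to the sensor's pixel count.
print(50 / 4)  # 12.5 megapixels, which the article rounds to 12
```

Each output value pools the light of four sensor pixels, which is why binning trades resolution for cleaner color and low-light results.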

Pixel 7 Pro vs. DSLR, people and pets

The Pixel 7 Pro was capable at portrait photography. I prefer shooting raw and editing the shots myself because I sometimes find the Pixel 7 Pro makes faces look a little too processed, and I find its color balance a bit cool for my tastes. With the main camera, the Pixel 7 Pro does a pretty good job finding faces, tracking them and staying focused. For 2022, the Pixel 7 Pro now can find individual eyes, the ideal focus point of a camera and a weak point on my older DSLR.

In this comparison, I find the DSLR did a better job with skin tones, but the Pixel 7 Pro capably exposed the face in tricky lighting.

Using the Pixel 7 Pro’s portrait mode, which artificially blurs photo backgrounds, I find the processing artifacts distracting, especially with flyaway hair, though that’s not a problem with the example below. The shot is workable for quick sharing and looks fine on smaller screens, but I wouldn’t make a print of it. For the DSLR shot, I used my Sigma 35mm f1.4 lens, shooting wide open at f1.4 for the smoothest possible background blur. It’s much better than the Pixel 7 Pro, though its shallow depth of field blurs the hands and plastic toys.

For pets, the Pixel 7 Pro again did a great job finding and focusing on eyes. Here’s my dog, up close. The main camera at 1x zoom, or 24mm, isn’t ideal for single subjects, though, and the camera’s performance at 2x isn’t as strong, so bear that in mind.

To see how much more detail my DSLR can capture, as long as I get focus right, check the cropped views below. And note that new mirrorless cameras from Sony, Nikon and Canon do a good job with eye tracking for easier focus.

DSLR vs. Pixel 7 Pro, telephoto cameras

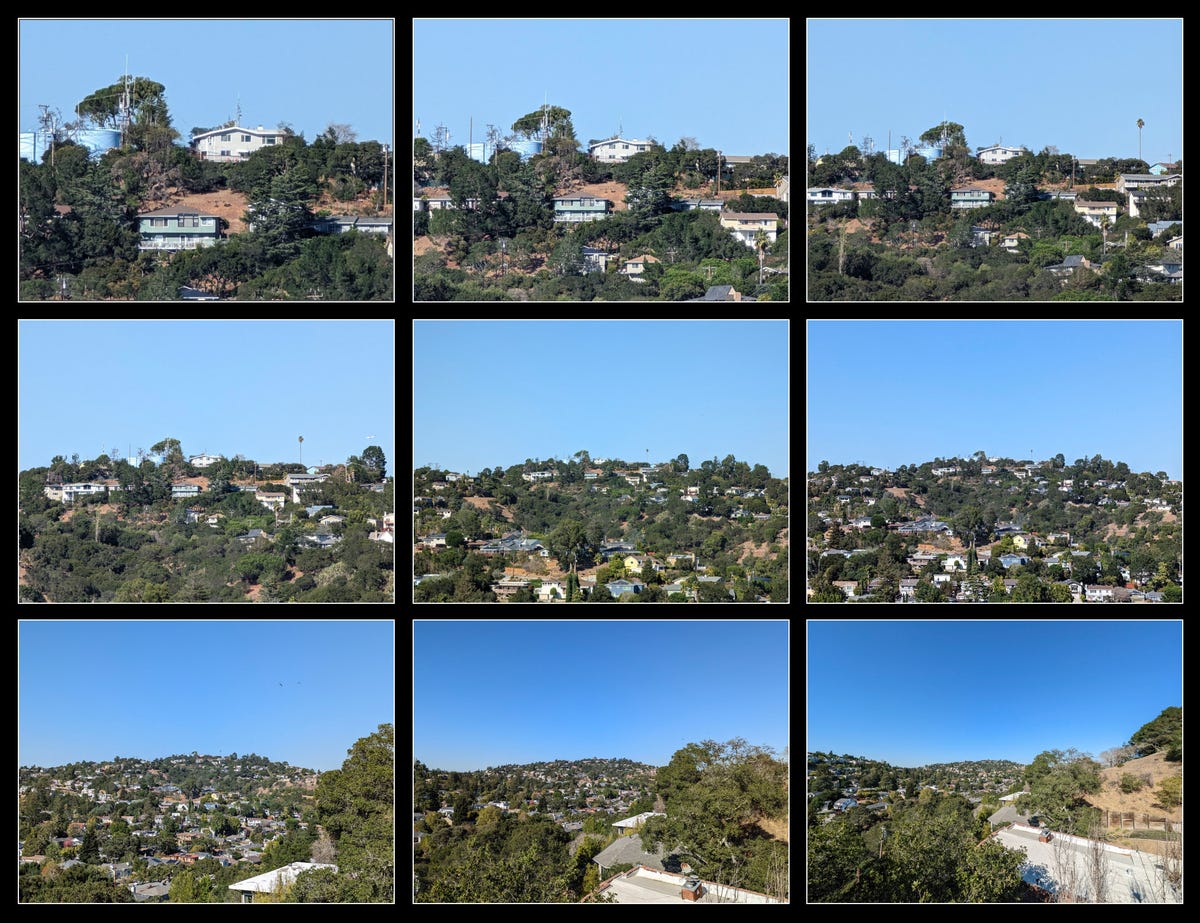

Telephoto lenses magnify more distant subjects, and the Pixel 7 Pro has a remarkable range for a smartphone. Its sensors can shoot at 2x, 5x and 10x zoom modes with minimal processing trickery. It’ll shoot at intermediate settings with various combinations of cropping and multi-camera image compositing that I find fairly convincing. Then it reaches up to 30x with Google’s AI-infused upscaling technology, called Super Res Zoom. Here’s the same scene shot across the Pixel 7 Pro’s full range from supertelephoto 30x to ultrawide 0.5x:

The Pixel 7 Pro’s zoom range reaches from 0.5x to 10x shooting at the native resolution of its three cameras, then extends to 30x with Google’s image processing technology. That’s an equivalent of 12mm to 720mm in conventional full-frame camera terms.

Stephen Shankland/CNET

The image quality is pretty bad by the time you reach 30x zoom, an equivalent of 720mm. But even my expensive DSLR gear only reaches 560mm maximum, and venturing beyond 10x on the Pixel 7 Pro can be justified in many circumstances. Not every photo has to be good enough quality to make an 8×10 print.

Bigger telephoto photography

Telephoto lenses are big, which is why those pro photographers at NFL games haul around monopods to support their hulking optics. Canon’s RF 400mm f/2.8 L IS USM lens, popular on the sidelines, weighs more than six pounds, measures more than 14 inches long, and costs more than my entire collection of cameras and lenses. My Canon 100-400mm zoom is smaller and cheaper, though it doesn’t let in as much light, and it’s still gargantuan compared with the Pixel 7 Pro. I’m delighted to be able to capture useful telephoto shots on a Pixel phone, an option that previously was available only on rival Android phones from Samsung and others.

Google exploits the Pixel 7 Pro’s 50-megapixel main camera sensor for the first step up the telephoto lens ladder, a 2x zoom level good for portraits. The Pixel 7 Pro uses just the central 12 megapixels to capture a 12-megapixel photo in 2x telephoto mode, an equivalent focal length of 48mm.

The dedicated telephoto camera kicks in at 5x zoom, an equivalent of 120mm. Instead of a bulky telephoto protuberance, Google uses a prism to bend light 90 degrees so the necessary lens length and 48-megapixel image sensor can be tucked sideways within the Pixel 7 Pro’s thicker “camera bar” section. It also can use the central megapixels in its 10x mode, or 240mm, an option I think is terrific. This San Francisco architectural sight below is pretty good:

Using AI and software processing to zoom further, the camera can reach 20x and even 30x zoom, which translates to 480mm and 720mm. By comparison, my DSLR reaches 560mm with my 1.4x telephoto extender.
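The zoom numbers and the millimeter equivalents are tied together by simple multiplication from the 24mm main camera. Here is a short sketch of that arithmetic; the function and constant names are just illustrative.

```python
MAIN_CAMERA_MM = 24  # main camera's full-frame equivalent focal length, per the article


def equivalent_focal_length(zoom):
    """Full-frame equivalent focal length in mm for a Pixel 7 Pro zoom setting."""
    return MAIN_CAMERA_MM * zoom


for zoom in (0.5, 1, 2, 5, 10, 20, 30):
    print(f"{zoom}x is about {equivalent_focal_length(zoom):g}mm")

# The DSLR's longest reach: 400mm lens with the 1.4x extender.
dslr_max_mm = 400 * 1.4
print(f"DSLR maximum: {dslr_max_mm:g}mm")
```

By this math the phone passes the DSLR's 560mm reach somewhere above 23x zoom, though only with heavy software upscaling.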

My DSLR would have trounced the Pixel 7 Pro for this scene of Bay Area fog lapping up against the Santa Cruz Mountains south of San Francisco, shot somewhere between 15x and 20x. (I wish Google would write zoom level metadata into photos the way my Canon records lens focal length settings.) But guess what? I was mountain biking and didn’t take my DSLR. The best camera is the one you have, as the saying goes.

San Francisco Bay Area fog lapping up against the Santa Cruz Mountains, photographed here at about 20x zoom with the Pixel 7 Pro, is a useful if flawed photo.

Stephen Shankland/CNET

Back at 10x zoom, I was pleased with this shot below of my pal Joe mountain biking. I’ve photographed people in this very spot before with smartphones, and this was the first time I wasn’t frustrated with the results.

A Pixel 7 Pro photo of a mountain biker taken at 10x zoom

Stephen Shankland/CNET

Google’s optics and image processing methods are clever but not magical. The Pixel 7 Pro produces a 12-megapixel image, but the farther beyond 10x you shoot, the more you’ll cringe at its blotchy details that look more like a watercolor painting. That’s the glass-is-half-empty view. I’m actually on the glass-is-half-full side, appreciating what you can do and recognizing that a lot of photos will be viewed on smaller screens. Image quality at 10x is respectable, and that alone is a major achievement.

Here’s a comparison of a rooftop party photographed with the Pixel 7 Pro at 30x, or 720mm equivalent, and my camera at 560mm, but cropped in to match the phone’s framing. The DSLR does better, of course. Even cropped, it’s an 18-megapixel image.
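Where does that 18-megapixel figure come from? Cropping to match a longer focal length throws away pixels in both dimensions, so the remaining count falls with the square of the crop. The sketch below works through it, along with the phone's own 2x and 10x crop modes; the function name is made up for illustration, and it assumes a simple centered crop with no upscaling.

```python
def cropped_megapixels(sensor_mp, native_mm, target_mm):
    """Megapixels left after cropping a native_mm frame to match target_mm framing."""
    linear_crop = target_mm / native_mm
    return sensor_mp / linear_crop ** 2


# 30-megapixel DSLR at 560mm, cropped to match the phone's 720mm framing.
dslr_crop = cropped_megapixels(30, 560, 720)

# The phone's crop modes: 50MP main camera at 2x, 48MP telephoto at 10x.
pixel_2x = cropped_megapixels(50, 24, 48)
pixel_10x = cropped_megapixels(48, 120, 240)

print(f"DSLR cropped to 720mm: {dslr_crop:.1f} MP")
print(f"Pixel main camera at 2x: {pixel_2x:.1f} MP")
print(f"Pixel telephoto at 10x: {pixel_10x:.1f} MP")
```

The same square-law explains why the phone's 2x and 10x modes still land at about 12 megapixels from much denser sensors.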

Practical limits on Pixel 7 Pro’s telephoto cameras

To really exercise the phone, I toted it to see the US Navy’s Blue Angels flight display over San Francisco. Buildings and fog blocking my view made photography tough, but I found new limitations to the Pixel 7 Pro.

Fiddling with screen controls to hit 10x or more zoom is slow. Framing fast-moving subjects on a smartphone screen is hard, even with the aid of the miniature wider-angle view that Google pops into the scene and its AI-assisted stabilization technology. Focus is also relatively pokey. With my DSLR, I could rapidly find the jets in the sky, lock focus, track them as they flew and shoot a burst of shots.

I didn’t get a single good photo of the Blue Angels with the Pixel 7 Pro. Google’s “pro-level zoom” works much better with stationary subjects.

DSLR vs. Pixel 7 Pro, shooting in the dark

Here’s where the Pixel 7 Pro beats out a vastly more expensive camera. There’s no way you can hold a camera steady for 6 seconds, but Pixel phones in effect can thanks to computational photography techniques that Google pioneered. Google takes a collection of photos, using AI to judge when your hands are most still, then combines these individual frames into one shot. It’s the basis of its Night Sight feature, which I’ve used many times and, at its extreme, powers an astrophotography mode I’ve used to take 4-minute exposures of the night sky.
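The core idea behind combining frames can be shown with a toy simulation: averaging many noisy readings of the same pixel gives a steadier value than any single reading. This is only a sketch of that principle, not Google's Night Sight pipeline, which also has to align frames and cope with motion. The brightness and noise values are invented for the example.

```python
import random
import statistics

random.seed(7)  # fixed seed so repeated runs give the same numbers

TRUE_BRIGHTNESS = 40.0  # hypothetical true value of one dim pixel
NOISE_SIGMA = 10.0      # hypothetical random noise added to each frame


def capture_frame():
    """One noisy reading of the pixel."""
    return random.gauss(TRUE_BRIGHTNESS, NOISE_SIGMA)


def stacked_pixel(num_frames):
    """Average num_frames noisy readings into one value."""
    return statistics.fmean([capture_frame() for _ in range(num_frames)])


def spread(num_frames, trials=2000):
    """How much the result varies from shot to shot (standard deviation)."""
    return statistics.pstdev([stacked_pixel(num_frames) for _ in range(trials)])


single = spread(1)
stacked = spread(16)
print(f"Noise with 1 frame:   {single:.1f}")
print(f"Noise with 16 frames: {stacked:.1f}")
```

In this model, stacking 16 frames cuts the noise to roughly a quarter, since random noise shrinks with the square root of the number of frames averaged.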

Below is a comparison of a nighttime scene with the Pixel 7 Pro at 1x, where it’s best at gathering light, and my DSLR with its 24-70mm f2.8 lens. The DSLR has more detail up close, but the Pixel 7 Pro does well, and its deeper depth of field means the leaves in the foreground aren’t a smeary mess.

Here’s a comparison of a 2x zoom photo with the Pixel 7 Pro and the best I could do handheld with my 24-70mm f2.8 lens. The longer your zoom, the harder it is to hold a camera steady, and even with my elbows on a railing to steady the camera, the Pixel 7 Pro shot was vastly easier to capture. I had to crank my DSLR’s sensitivity to ISO 12,800 to get the shutter speed down to 1/8sec, and even then, most of the photos were duds. Image stabilization helps, but this lens doesn’t have it.

Just for kicks, I used a tripod to take three exposure-bracketed shots with my DSLR and merged them into a single HDR (high dynamic range) photo in Adobe’s Lightroom software. The longest exposure was 30 seconds. That’s how much effort it took to beat a Night Sight photo I took just standing there holding the phone for 6 seconds. Check the comparison below.

Parked cars

Here’s where my DSLR completely trounced the Pixel 7 Pro, even with Night Sight, though: the nearly full moon. Here’s the Pixel 7 Pro at 30x zoom vs. my DSLR at 560mm, cropped so the framing matches.

DSLR vs. Pixel 7 Pro, dynamic range

One of the best measures of a camera is dynamic range, the span between dark and light it can capture in a single scene. To exercise the Pixel 7 Pro here, I shot in raw format, which allows for more editing flexibility. Then I edited the photos, cranking the exposure up 4 stops to reveal noise problems in shadowed areas and then down 4 stops to see how well it captured detail in bright areas.
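For anyone unfamiliar with stops: each stop doubles or halves the light, so a 4-stop push is a big change. A tiny sketch of the arithmetic, with an illustrative function name:

```python
def exposure_multiplier(stops):
    """Brightness multiplier for an exposure change measured in stops."""
    return 2.0 ** stops


print(exposure_multiplier(4))   # 16.0, sixteen times brighter
print(exposure_multiplier(-4))  # 0.0625, one-sixteenth as bright
```

Brightening shadows 16-fold amplifies any noise hiding there, which is what makes this a demanding test.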

In short, I’m impressed. Google squeezes a remarkable amount of data out of its relatively small sensor with its processing methods.

Two techniques are relevant. With Google’s HDR+ system, the Pixel 7 Pro combines multiple underexposed frames and one regularly exposed frame to record shadow detail without blowing out highlights in bright areas. And Google includes this data in a “computational raw” format that packages that detail in Adobe’s very flexible DNG format. It’s not truly raw, like the single frame of data pulled from my DSLR’s image sensor is, but it’s an excellent option for smartphone photography.

Below is a cropped photo with the Pixel 7 Pro’s 1x camera, underexposed by 4 stops to see if it was able to record a range of tones even in the very bright pampas grass plumes. It was.

Shooting at 2x, which uses only the central pixels on the 1x camera, poses more of a challenge when going up against my DSLR, which suffers no such degradation in hardware abilities when I zoom in. Overexposed by 4 stops, you can see a lot more noise and color problems with the Pixel 7 Pro in the comparison below. But overall, it’s got impressive dynamic range on the main camera.

DSLR vs. Pixel 7 Pro, ultrawide

Google gave the ultrawide lens on the Pixel 7 Pro an even wider field of view than last year’s model had. What you like is a matter of personal preference, but I appreciate the dramatic perspective that you can capture with a very wide angle. When I don’t need it, the 24mm main camera still qualifies as wide angle.

Here’s a comparison of a scene shot with the Pixel 7 Pro and my DSLR’s 16-35mm ultrawide zoom.

DSLR vs. Pixel 7 Pro, macro

The new ultrawide camera now has autofocus hardware, and that opens up the world of macro photography for close-up subjects. Apple’s iPhone Pro models got this ability in 2021, and I’ve loved macro photos for years as a way to shoot flowers, mushrooms, toys and other small subjects, so I’m delighted to see it on the higher-end Pixel phones.

As with the iPhone, though, the macro is useful as long as the subject fits in the central portion of the frame. Note in this comparison below how blurred the image gets toward the periphery of this butterfly coaster with the Pixel 7 Pro.

No, it’s not as good as my DSLR. But with macro abilities, Night Sight and a zoom range from ultrawide to super telephoto, the Pixel 7 Pro is more than just useful for snapshots. It lets you start exploring a much bigger part of photography’s creative realm.

The post Quantum Computing’s Impact Could Come Sooner Than You Think first appeared on Joggingvideo.com.

In 2013, Rigetti Computing began its push to make quantum computers. That effort could bear serious fruit starting in 2023, the company said Friday.

That’s because next year, the Berkeley, California-based company plans to deliver both its fourth-generation machine, called Ankaa, and an expanded model called Lyra. The company hopes those machines will usher in “quantum advantage,” when the radically different machines mature into devices that actually deliver results out of the reach of conventional computers, said Rigetti founder and Chief Executive Chad Rigetti.

Quantum computers rely on the weird physics of ultrasmall elements like atoms and photons to perform calculations that are impractical on the conventional computer processors that power smartphones, laptops and data centers. Advocates hope quantum computers will lead to more powerful vehicle batteries, new drugs, more efficient package delivery, more effective artificial intelligence and other breakthroughs.

So far, quantum computers are very expensive research projects. Rigetti is among a large group scrambling to be the first to quantum advantage, though. That includes tech giants like IBM, Google, Baidu and Intel and specialists like Quantinuum, IonQ, PsiQuantum, Pasqal and Silicon Quantum Computing.

“This is the new space race,” Rigetti said in an exclusive interview ahead of the company’s first investor day.

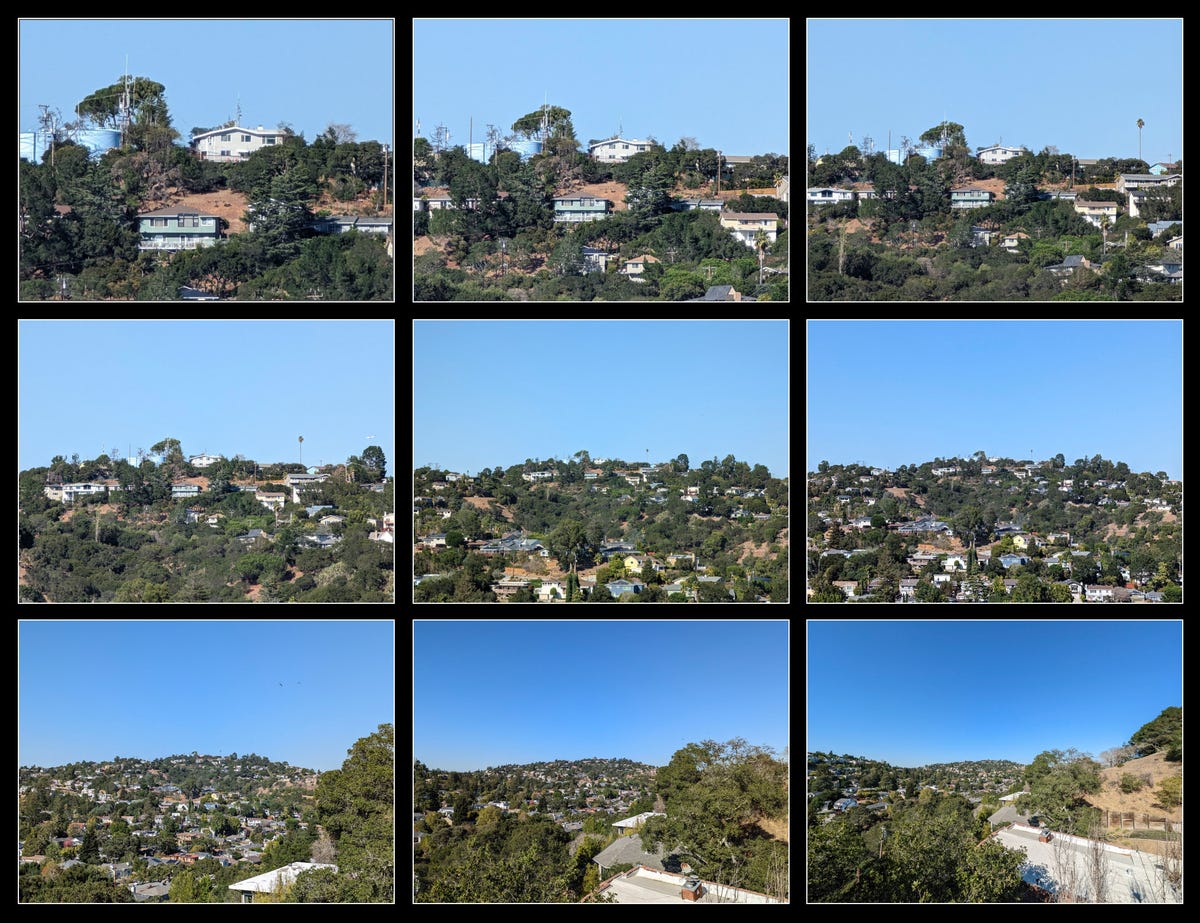

For the event, the company is revealing more details about its full technology array, including manufacturing, hardware, the applications its computers will run and the cloud services to reach customers. “We’re building the full rocket,” Rigetti said.

Although Rigetti isn’t a household name, it holds weight in this world. In February, Rigetti raised $262 million and became one of a small number of publicly traded quantum computing companies. Although the company has been clear its quantum computing business is a long-term plan, investors have become more skeptical. Its stock price has dropped by about three quarters since going public, hurt most recently when Rigetti announced the delay of a $4 million US government contract that would have accounted for much of the company’s annual revenue of about $12 million to $13 million.

Quantum computers with more qubits

The company argues it’s got the right approach for the long run, though. It starts in early 2023 with Ankaa, a processor that includes 84 qubits, the fundamental data processing element in a quantum computer. Four of those ganged together are the foundation for Lyra, a 336-qubit machine. The names are astronomical: Ankaa is a star, and Lyra is a constellation.

Rigetti doesn’t promise quantum advantage from the 336-qubit machine, but it’s the company’s hope. “We believe it’s absolutely within the realm of possibility,” Rigetti said.

Having more qubits is crucial to more sophisticated algorithms needed for quantum advantage. Rigetti hopes customers in the finance, automotive and government sectors will be eager to pay for that quantum computing horsepower. Auto companies could research new battery technologies and optimize their complex manufacturing operations, and financial services companies are always looking for better ways to spot trends and make trading decisions, Rigetti said.

Rigetti plans to link its Ankaa modules into larger machines: a 1,000-qubit computer in 2025 and a 4,000-qubit model in 2027.

Rigetti isn’t the only company trying to build a rocket, though. IBM has a 127-qubit quantum computer today, with plans for a 433-qubit model in 2023 and more than 4,000 qubits in 2025. Although qubit count is only one measure of a quantum computer’s utility, it’s an important factor.

“What Rigetti is doing in terms of qubits pales in comparison to IBM,” said Moor Insights & Strategy analyst Paul Smith-Goodson.

Rigetti’s quantum computing deals

Along with those machines, Rigetti expects developments in manufacturing, including a 5,000-square-foot expansion of the company’s Fremont, California, chip fabrication facility now underway, improvements in the error correction technology necessary to perform more than the most fleeting quantum computing calculations, and better software and services so customers can actually use its machines.

Rigetti Computing’s plans for improvements to its broad suite of quantum computing technology.

Rigetti Computing

To reach its goals, Rigetti also announced four new deals at its investor event:

- Graphics and AI chip giant Nvidia has begun a partnership to marry quantum and conventional computing to improve climate modeling

- Microsoft’s Azure cloud computing service will offer access to Rigetti machines

- Bluefors will build new refrigerators to accommodate the 1,000- and 4,000-qubit systems, a key technology partnership since Rigetti’s machines must be cooled nearly to absolute zero

- Keysight Technologies will offer its technology for reducing quantum computing error rates, a critical step in running more complex calculations

Qubits are easily perturbed, so coping with errors is critical to quantum computing progress. So is a better foundation less prone to errors. Quantum computer makers track that with a measurement called gate fidelity. Rigetti is at 95% to 97% fidelity today, but prototypes for its fourth-generation Ankaa-based systems have shown 99%, Rigetti said.
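A rough way to see why a few percentage points of gate fidelity matter: if every operation succeeds with probability f and errors are independent, a calculation with n operations comes through clean with probability of about f to the power n. That is a deliberately crude model, not how Rigetti or anyone else formally benchmarks a machine, and the function names are invented for this sketch.

```python
import math


def clean_run_probability(fidelity, num_gates):
    """Chance that num_gates operations all succeed, assuming independent errors."""
    return fidelity ** num_gates


def gates_before_half(fidelity):
    """Largest gate count that keeps the clean-run chance at 50% or better."""
    return math.floor(math.log(0.5) / math.log(fidelity))


for fidelity in (0.95, 0.97, 0.99):
    print(f"{fidelity:.0%} fidelity: "
          f"{gates_before_half(fidelity)} gates before a coin flip, "
          f"{clean_run_probability(fidelity, 100):.1%} clean after 100 gates")
```

Under these assumptions, moving from 95% to 99% stretches the useful length of a calculation from about 13 operations to about 68, which hints at why error rates get so much attention.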

In the eyes of analyst Smith-Goodson, quantum computing will become useful eventually, but there’s plenty of uncertainty about how and when we’ll get there.

“Everybody is working toward a million qubit machine,” he said. “We’re not sure which technology is really going to be the one that is going to actually make it.”

The post Ditch Your iPhone Password. Apple’s New iOS 16 Feature Is More Secure first appeared on Joggingvideo.com.

This story is part of Focal Point iPhone 2022, CNET’s collection of news, tips and advice around Apple’s most popular product.

What’s happening

Apple’s new iPhone 14 models will come with technology called passkeys, designed to be as easy to use as passwords but much more secure. That comes with iOS 16, but Google is building passkeys into its phone and browser software, too.

Why it matters

Passwords have long been plagued with problems, but starting with iPhones, tech giants have cooperated to design a practical alternative that reduces vulnerabilities and hacking risks.

After Apple released iOS 16 on Monday and started delivering iPhone 14 smartphones on Friday, you now can try out passkeys, a new login technology that promises to be more secure than passwords at guarding access to websites, email and other online services.

Apple demonstrated passkeys at its Worldwide Developers Conference in June and had said they’d come to iOS 16 and MacOS Ventura this fall. They’re coming to Google’s Android and to web browsers later this year, too.

Passkeys are as easy to use as passwords, and maybe easier. They replace the riot of keystrokes needed for passwords with a biometric check on our phones or computers. They also stop phishing attacks and banish the complications of two-factor authentication, like SMS codes, that are tied to the password system’s weaknesses.

Once you set up a passkey for a site or app, it’s stored on the phone or personal computer you used to set it up. Services like Apple’s iCloud Keychain or Google’s Chrome password manager can synchronize passkeys across your devices. Dozens of tech companies developed the open standards behind passkeys in a group called the FIDO Alliance, which announced passkeys in May.

“Now is the time to adopt them,” Garrett Davidson, an authentication technology engineer at Apple, said in a WWDC talk about passkeys. “With passkeys, not only is the user experience better than with passwords, but entire categories of security — like weak and reused credentials, credential leaks, and phishing — are just not possible anymore.”

You’ll have to spend a little time on the learning curve before passkeys meet their potential. You’ll also have to decide whether Apple, Microsoft or Google is the best option for you.

Here’s a look at the technology.

What’s a passkey?

It’s a new type of login credential consisting of a little bit of digital data your PC or phone uses when logging onto a server. You approve each use of that data with an authentication step, such as a fingerprint check, face recognition, a PIN code or the login swipe pattern familiar to Android phone owners.

Here’s the catch: You’ll have to have your phone or computer with you to use passkeys. You can’t log onto a passkey-secured account from a friend’s computer without a device of your own.

Passkeys are synchronized and backed up. If you get a new Android phone or iPhone, Google and Apple can restore your passkeys. With end-to-end encryption, Google and Apple can’t see or alter the passkeys. Apple has designed its system to keep passkeys secure even if an attacker or Apple employee compromises your iCloud account.

How does setting up a passkey work?

It’s pretty simple. Use your fingerprint, face or another mechanism to authenticate a passkey when a website or app prompts you to set one up. That’s it.

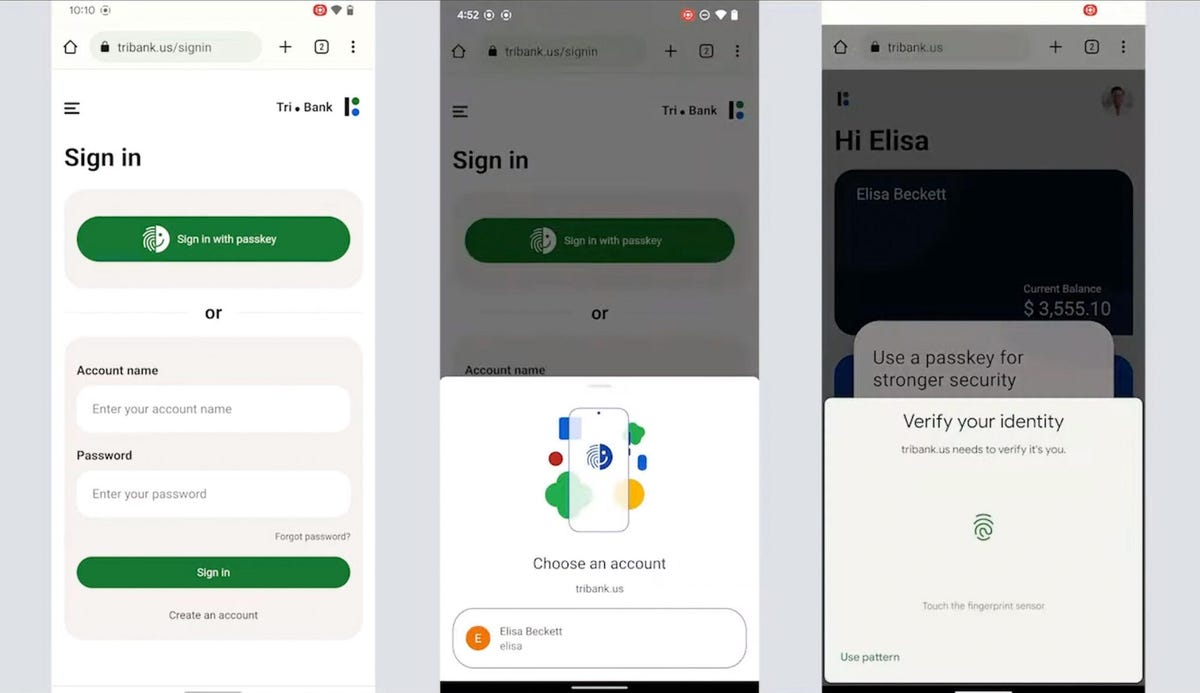

These steps show how to log on with passkeys on an Android phone: choose the passkey option, choose the appropriate passkey, and authenticate with a fingerprint ID. Face recognition also is an option on compatible phones.

How do I use a passkey to log in?

When using a phone, a passkey authentication option will appear when you try to log on to an app. Tap that option, use the authentication technique you’ve chosen, and you’re in.

For websites, you should see a passkey option by the username field. After that, the process is the same.

Once you have a passkey on your phone, you can use it to facilitate a login on another nearby device, like your laptop. Once you’re logged in, that website can offer to create a new passkey linked to the new device.

What if I need to log in to a website while using someone else’s computer?

You can use a passkey stored on your phone to log onto another nearby device, like a laptop you’re borrowing. The login screen on the borrowed laptop will have an option to present a QR code you can scan with your phone. The system uses Bluetooth to ensure your phone and the computer are close by, then lets you use a fingerprint or face ID check on your own phone. Your phone then will communicate with the computer over a secure connection to complete the authentication process.

Why are passkeys more secure than passwords?

Passkeys employ a time-tested security foundation called public key cryptography for login operations. That’s the same technology that protects your credit card number when you type it into a website. The beauty of the system is that a website only has to base its passkey record on your public key, data that’s designed to be openly visible. The private key used to set up a passkey is stored only on your own device. There’s no database of password data that a hacker can steal.
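For the curious, here is a toy illustration of the public key idea: the device signs a random challenge with its private key, and the server checks the signature using only the public key. It uses textbook RSA with tiny numbers and is emphatically not secure and not how passkeys are actually implemented; real passkeys follow the FIDO standards and use modern signature algorithms. All function names here are hypothetical.

```python
import hashlib
import secrets

# Toy RSA key pair built from tiny textbook primes. NOT secure, illustration only.
P, Q = 61, 53
N = P * Q    # 3233, the public modulus
E = 17       # public exponent: the server may store (N, E) openly
D = 2753     # private exponent: never leaves the user's device


def digest(message: bytes) -> int:
    """Hash the message and squeeze it into the toy key's tiny range."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def device_sign(challenge: bytes) -> int:
    """What the phone does after the fingerprint or face check succeeds."""
    return pow(digest(challenge), D, N)


def server_verify(challenge: bytes, signature: int) -> bool:
    """What the website does, using only the public values N and E."""
    return pow(signature, E, N) == digest(challenge)


challenge = secrets.token_bytes(16)  # fresh random challenge for each login
signature = device_sign(challenge)

print(server_verify(challenge, signature))            # True
print(server_verify(challenge, (signature + 1) % N))  # False, a forged signature
```

The server never holds anything that would let an attacker sign in, which is the point of the "no database of password data" claim above.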

Dumping passwords

- Apple Is Trying to Kill Passwords With Touch ID- and Face ID-Based Passkeys

- Best Password Manager to Use for 2022

- Dumping Passwords Can Improve Your Security – Really

- Microsoft Now Lets You Log Into Outlook, Skype, Xbox Live Without a Password

Another big benefit is that passkeys block phishing attempts. “Passkeys are intrinsically linked to the website or app they were set up for, so users can never be tricked into using their passkey on the wrong website,” Ricky Mondello, who oversees authentication technology at Apple, said in a WWDC video.
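The phishing protection Mondello describes boils down to a lookup by site: the device only offers a passkey for the exact site it was created for. A minimal sketch of that idea follows, with a made-up domain and a plain dictionary standing in for the device's credential store. It is not Apple's or Google's implementation.

```python
# Hypothetical passkey store on a device: one credential per site domain.
passkeys = {
    "example-bank.com": "credential-for-example-bank",
}


def find_passkey(site_domain):
    """Return the passkey for this exact domain, or None if there isn't one."""
    return passkeys.get(site_domain)


real_site = find_passkey("example-bank.com")
fake_site = find_passkey("examp1e-bank.com")  # lookalike domain with a digit 1

print(real_site)  # credential-for-example-bank
print(fake_site)  # None, so there is nothing for the phishing page to capture
```

A person can be fooled by a lookalike address, but an exact-match lookup can't, and that's where the protection comes from.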

Using passkeys requires that you have your device handy and be able to unlock it, a combination that offers the protection of two-factor authentication but with less bother than SMS codes. And with passkeys, nobody can snoop over your shoulder to watch you type your password.

When will I see passkeys?

Passkeys have begun emerging this year.

Passkeys are in iOS 16 now and will arrive in iPadOS 16 and MacOS Ventura when Apple releases that software later this fall. Google will bring passkey support to Android software by the end of 2022 for developer testing, Google authentication leader Mark Risher said in May. Passkey support should arrive in Chrome and Chrome OS at the same time. Microsoft plans support in Windows in 2022.

That’s just enabling technology, though. Websites and apps also must be updated to support passkeys. Some developers will be eager to take advantage of the security benefits, but many will move more slowly. Even if passkeys catch on fast, don’t expect passwords to disappear.

One early adopter, travel booking service Kayak, added passkey support to its app and website this week. Expect to see lots more gradually adopt it.

Will websites and apps require me to use passkeys?

It’s unlikely you’ll be forced to use passkeys while the technology is new and unfamiliar. Websites and apps you already use will likely add passkey support alongside existing password methods.

If you need to log into a friend’s computer that doesn’t have your passkey, scanning a QR code will let your phone handle the authentication process.

Apple

When you sign up for a new service, passkeys may be presented as the preferred option. Eventually, they may become the only option.

Will passkeys lock me into Apple or Google ecosystems?

Not exactly. Although passkeys are anchored to one company’s technology suite, you’ll be able to bridge out of, say, Apple’s world to use passkeys with Microsoft’s or Google’s.

“Users can sign in on a Google Chrome browser that’s running on Microsoft Windows, using a passkey on an Apple device,” Vasu Jakkal, a Microsoft leader of security and identity technology, said in a May blog post.

Passkey advocates also are working on technology to let people migrate their passkeys from one tech domain to another, Apple and Google said.

How are password managers involved with passkeys?

Password managers play an increasingly important role in generating, storing and synchronizing passwords. But passkeys will likely be anchored to your phone or personal computer, not your password manager, at least in the eyes of tech giants like Google and Apple.

That could change, though.

“We expect a natural evolution to an architecture that allows third-party passkey managers to plug in, and for portability among ecosystems,” Google’s Risher said.

He anticipates that passkeys will evolve to lower barriers between ecosystems and to accommodate third-party passkey managers. “This has been a discussion point since early in this industry push.”

Indeed, password manager Dashlane is testing passkey support and plans to release it broadly in coming weeks. “Users can store their passkeys for multiple sites and benefit from the same convenience and security they already have with their passwords,” the company said in an Aug. 31 blog post.

1Password maker AgileBits just joined the FIDO Alliance, and Dashlane, Bitwarden and LastPass already are members.

The post A Portless iPhone? Please, Apple, Don’t Go There first appeared on Joggingvideo.com.

]]>This story is part of Focal Point iPhone 2022, CNET’s collection of news, tips and advice around Apple’s most popular product.

Apple evicted the 3.5mm headphone jack from iPhones starting in 2016. This year, it dropped the SIM card slot, relying on eSIM chips for its iPhone 14 smartphones. If you extrapolate, you might expect iPhones to drop the charging and data port next, beginning the era of the portless iPhone.

I sure hope not.

I’m all for progress, but I think it’s best that we keep some of those copper cables in our lives — even though that runs counter to the idea of a sleek and seamless gadget that Apple aspires to and that’s now becoming feasible, as CNET senior editor Lisa Eadicicco points out.

But hear me out. There are three big problems with a portless iPhone: charging inconvenience, slow data transfer and the rejection of wired earbuds. Here’s a look at the situation.

Charging inconvenience

The first big problem with a portless iPhone is that it would be harder to charge.

You may well have charging pads in the kitchen, in the office, in your car, and perhaps even on the nightstand by your bed. You need to charge your phone elsewhere, though: at the airport, in a rental car, at your friend’s house, in a college lecture hall, at a conference. Hauling around the necessary charger and cable for your “wireless” charging is even worse than carrying an ordinary wired charger.

Sure, some venues now have wireless chargers built in, including coffee shops and airports, but you don’t want to roll the dice on availability. Chances are good you’d lose.


Wireless chargers also are more expensive, often bulkier and can be finicky about phone placement, even with Apple’s MagSafe technology to align your phone better. On several occasions I’ve woken up in the morning or driven for hours and discovered that wireless charging didn’t work.

Wired charging also is faster, wastes less energy and doesn’t leave my phone piping hot.

If Apple ever dumps its now archaic Lightning port and embraces a USB-C port on iPhones, as I expect it will, its charging and data port becomes more useful. I already use USB-C to charge my MacBook Pro, iPad Pro, Framework laptop, Sony noise-canceling earphones, Pixel 6 Pro phone, Pixel Buds Pro earbud case, and Nintendo Switch game console and controllers. When I’m traveling, I always have a USB-C charger with me, and I expect USB-C ports to become more common in airports, planes, hotels, cars and cafes. Don’t hold your breath for a wireless charging pad jammed into an economy class seat.

“There is no question that USB-C is long overdue on an iPhone especially given it is on iPad and Mac,” Creative Strategies analyst Carolina Milanesi said. “It is not always possible to do wireless or MagSafe.”

Data transfer speed

The convenience of wireless data transfer makes it the norm for phones. Gone are the days when we needed to plug our phones into our laptops to synchronize and back up data.

But if you’re one of those creative types Apple shows in every iPhone launch event, shooting 4K video for your indie movie, you’ll appreciate wired data transfer to get that video onto your laptop faster. That’s especially true if you’re shooting with Apple’s ProRes video.

A 1-minute ProRes clip I shot recently is 210MB; imagine how fast you’ll plow through the gigabytes if you’re shooting more seriously. Wired connections can be nice for transferring lots of photos, too, using a tool like Apple’s Image Capture utility or Adobe’s Lightroom photo editing and cataloging software.
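To put the clip size above in perspective, here is a rough Python sketch that scales the 210MB-per-minute figure to longer shoots and estimates transfer time. The two throughput values are hypothetical numbers picked only for illustration, not measurements of any particular cable or wireless link, and 1GB is treated as 1,000MB.

```python
CLIP_MB_PER_MINUTE = 210  # size of the 1-minute ProRes clip cited above


def footage_size_gb(minutes, mb_per_minute=CLIP_MB_PER_MINUTE):
    """Estimate footage size in gigabytes, taking 1GB as 1,000MB."""
    return minutes * mb_per_minute / 1000


def transfer_seconds(size_gb, throughput_mb_per_s):
    """Time needed to move size_gb at a sustained throughput in MB per second."""
    return size_gb * 1000 / throughput_mb_per_s


hour_of_footage_gb = footage_size_gb(60)

# Hypothetical sustained throughputs, chosen only to show how the time scales.
slow_link_s = transfer_seconds(hour_of_footage_gb, 10)
fast_link_s = transfer_seconds(hour_of_footage_gb, 40)

print(f"One hour of footage: {hour_of_footage_gb:.1f}GB")
print(f"At 10MB/s: {slow_link_s / 60:.0f} minutes")
print(f"At 40MB/s: {fast_link_s / 60:.2f} minutes")
```

Whatever the real speeds turn out to be, transfer time falls in direct proportion to throughput, which is why a faster link matters more as the footage piles up.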

Wired earbuds

I know, I know, AirPods or some other wireless earbuds these days are a booming business. But wired headphones remain useful. They’re even a retro fashion statement for some.

I like them because they don’t run out of battery power or suffer from Bluetooth’s flakiness. And they’re way harder to misplace or drop down a street gutter while you’re running to catch the bus.

Wired earbuds are way cheaper. Maybe you can afford $249 second-generation AirPods Pro, but not everyone can. The 3.5mm audio jack is being squeezed off smartphones, but a USB-C port on iPhones would make it more likely you could grab a cheap set of earbuds in the airport travel store if you forgot your AirPods.

I’d rather have a USB-C port than this Apple Lightning port on my iPhone, but I’d prefer either compared to no port at all.

Stephen Shankland/CNET

Perhaps there’s room for compromise — one iPhone for the wireless-only crowd and another model for people like me. But Apple doesn’t like to stick consumers with confusing choices, so I’d be surprised.

The case for portless iPhones

There are, of course, some significant advantages we’d get from a portless iPhone.

It would bring a new level of sleekness and reduce the amount of cable fiddling in your life. iPhone cases would be stronger and more impervious to water and dust. Apple would get a little extra interior space it could fill with a bigger battery or other electronics.

“A portless iPhone is probably more structurally rigid and allows for more room for the Taptic Engine or speakers or maybe an antenna,” said Anshel Sag, an analyst at Moor Insights & Strategy.

Apple, which typically doesn’t discuss its future plans, didn’t comment for this story.

Advances in wireless charging and data transfer technologies make a portless iPhone conceivable. More advances are likely, too: better Wi-Fi, wireless charging that works anywhere in a room rather than just on a charging pad, and the potential use of ultra wideband positioning technology for fast short-range data transfer.

I already enjoy today’s wireless technologies that would make a portless iPhone possible. I just think the drawbacks of relying on them exclusively outweigh the advantages.

The best future is one that keeps that charging and data port. So, Apple, please don’t ditch it, too. And while your engineers are looking at the subject, how about USB-C?


]]>The post Why the iPhone 14 Pro’s 48MP Camera Deserves the Attention first appeared on Joggingvideo.com.

]]>This story is part of Focal Point iPhone 2022, CNET’s collection of news, tips and advice around Apple’s most popular product.

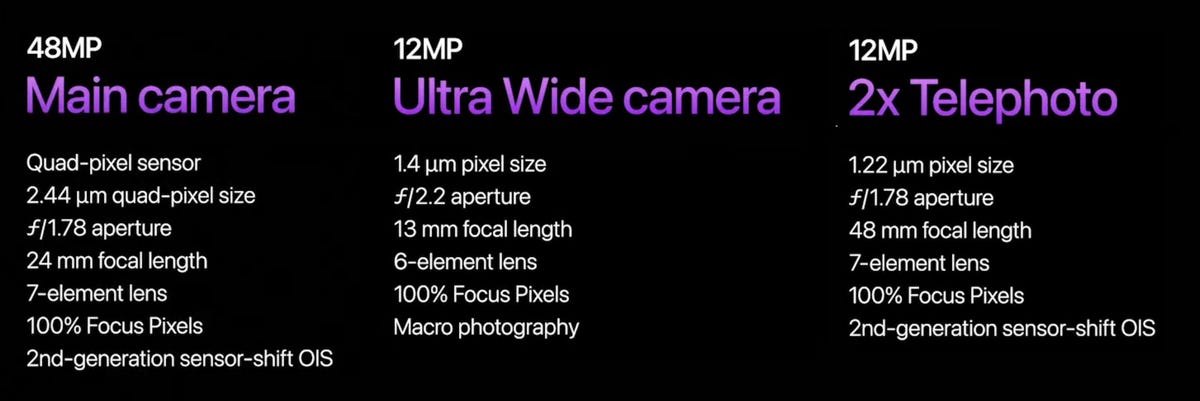

Apple’s iPhone 14 and iPhone 14 Plus smartphones get better main and selfie cameras, but if you’re a serious smartphone photographer, you should concentrate on the iPhone 14 Pro and Pro Max announced Wednesday. These higher-end models have a 48-megapixel main camera designed to capture more detail and, in effect, add a whole new telephoto lens.

The $999 iPhone 14 Pro and $1,099 iPhone 14 Pro Max start with a better hardware foundation. Their main camera’s image sensor is 65% larger than last year’s, a boost that helps double its low-light performance, said Victor Silva, an iPhone product manager. Low-light performance, a critical shortcoming in smartphone cameras, triples on the ultrawide angle camera and doubles on the telephoto.

But it’s the 48-megapixel sensor that deserves the most attention. It can be used two ways. First, the central 12 megapixels of the image can act as a 2x zoom telephoto camera by cropping out the outer portion of the image. Second, when shooting in Apple’s more advanced ProRaw format, you can take 48-megapixel images. That’s good for taking big landscape photos with lots of detail or for giving yourself more flexibility to crop a photo without losing too much resolution.

Cameras are one of the most noticeable changes in smartphone models from one year to the next, especially since engineers have embraced thicker, protruding lenses as a signature design element. Customers who might not notice a faster processor do notice the arrival of new camera modules, like the ultrawide angle and telephoto options that now are common on high-end phones.

Apple unveiled the new camera technology at its fall product launch event, a major moment on the annual technology calendar. The iPhone itself is an enormous business, but it’s also a foundation of a huge technology ecosystem deeply embedded into millions of people’s lives, including services like iCloud and Apple Arcade and accessories like the new second-generation AirPods Pro and Apple Watch Series 8.


Pixel binning comes to the iPhone

Apple has stuck with 12-megapixel main cameras since first using them in the iPhone 6S in 2015. The approach offered a reasonable balance of detail and low-light performance without breaking the bank or overtaxing the phone processors that handle image data. But rivals, most notably Samsung, have added image sensors with 48 megapixels and even 108 megapixels.

More pixels aren’t necessarily better. Increasing megapixel counts means decreasing the size of each pixel, and that can hurt image quality unless there’s lots of light.

But by joining 2×2 or 3×3 pixel groups together into a single virtual pixel, an approach called pixel binning, camera makers get more flexibility. When there’s abundant light, the camera can take a 48-megapixel image that lets you dive into the photo’s details better. But if it’s dim, the camera will use the virtual pixels to take a 12-megapixel shot that suffers less from image noise and other problems.
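As a minimal sketch of the binning idea described above, the Python below sums each 2×2 block of a tiny grayscale grid into one virtual pixel. It is a simplification for illustration only: real sensors use color filter arrays and do this work in hardware, and the function name and sample values are made up for the example.

```python
def bin_pixels(sensor, factor=2):
    """Sum each factor x factor block of sensor values into one virtual pixel."""
    rows, cols = len(sensor), len(sensor[0])
    if rows % factor or cols % factor:
        raise ValueError("sensor dimensions must be multiples of the bin factor")

    binned = []
    for r in range(0, rows, factor):
        out_row = []
        for c in range(0, cols, factor):
            # Add up the light collected by every pixel in this block.
            block_sum = sum(
                sensor[r + dr][c + dc]
                for dr in range(factor)
                for dc in range(factor)
            )
            out_row.append(block_sum)
        binned.append(out_row)
    return binned


# A toy 4x4 "sensor" of light readings (hypothetical values).
sensor = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]

binned = bin_pixels(sensor)
print(binned)  # [[14, 22], [46, 54]]
```

The output has a quarter as many pixels as the input, the same ratio as going from 48 megapixels to 12, and each virtual pixel carries the combined signal of four physical ones.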


When shooting ordinary photos with the iPhone 14 Pro models, Apple will take 12-megapixel shots, whether with the ultrawide camera, the main wide-angle camera, the 3x telephoto camera or the new 2x telephoto mode that uses the middle of the main camera sensor. To get the full 48 megapixels, you’ll have to use Apple’s ProRaw mode, which offers more detail and editing flexibility but requires some manual labor to convert into a conveniently shareable JPEG or HEIC image.

“You can now shoot ProRaw at 48-megapixel resolution, taking advantage of every pixel in the main camera,” Silva said. “It’s unbelievable how much we can zoom in.”

This chart shows details of the iPhone 14 Pro’s new main and ultrawide cameras. To the right are details for the main camera when shooting in 2x telephoto mode. Note that the effective pixel size is smaller in 2x mode than 1x mode, which means worse image quality. Apple didn’t release details on the 3x telephoto camera.

Apple; composite by Stephen Shankland/CNET

The 2x telephoto option uses the 12 million relatively small pixels in the center of the 48-megapixel main camera sensor. That will mean worse image quality than shooting with the full 48 megapixels. But Apple, which increased that sensor size considerably compared with last year, says even those pixels are still bigger than on previous iPhone 2x telephoto cameras.

“We can go beyond the three fixed lenses of the pro camera system,” Silva said. The 2x mode can shoot 4K video, too. Although its pixels are a quarter the area of the main camera in 12-megapixel mode, the 2x mode still gets the main camera’s relatively wide f1.78 aperture lens for better light gathering abilities than many smartphone telephoto cameras.
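The 2x figure and the quarter-area figure both follow from simple geometry, which this short Python sketch works through. It assumes square pixels and a crop with the same aspect ratio as the full sensor; the function names are illustrative.

```python
import math


def center_crop_zoom(full_megapixels, crop_megapixels):
    """Linear zoom factor gained by keeping only the central crop of a sensor.

    Cropping to 1/n of the pixels keeps 1/sqrt(n) of the width and height,
    so the field of view narrows by a factor of sqrt(n).
    """
    return math.sqrt(full_megapixels / crop_megapixels)


def native_to_binned_area_ratio(bin_factor):
    """Area of one physical pixel relative to a bin_factor x bin_factor virtual pixel."""
    return 1 / (bin_factor * bin_factor)


zoom = center_crop_zoom(48, 12)
area_ratio = native_to_binned_area_ratio(2)

print(f"Zoom from cropping 48MP to 12MP: {zoom:.1f}x")
print(f"Physical pixel area vs. 2x2 binned pixel: {area_ratio:.2f}")
```

Cropping to a quarter of the pixels halves the frame in each dimension, hence 2x, while each unbinned pixel covers a quarter of the area of the 2×2 virtual pixel used in ordinary 12-megapixel shots.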

Other iPhone 14 Pro camera changes

Among other changes coming with the new phones:

- The main camera on the iPhone 14 Pro models now has a 24mm equivalent focal length, a bit wider than the 26mm lens Apple has used for years. That’ll accommodate group shots and indoor scenes better, where photographers are more likely to benefit from the main camera’s better low-light performance, but it’ll mean you’ll have to get closer to portrait subjects if you want to fill the frame.

- The ultrawide camera is sharper, improving the close-up macro photos, Silva said.

- Apple updated its camera’s flash with a nine-LED system that controls the pattern and intensity of light to accommodate the cameras’ different fields of view. It’s twice as bright in some conditions.

In Apple’s presentation, it didn’t shine the spotlight on the 3x telephoto camera, a focal length unchanged from the iPhone 13 Pro to the iPhone 14 Pro. In its press release, it said the camera is “improved” but didn’t share further details. Apple didn’t respond to a request for comment.

Anshel Sag, an analyst at Moor Insights & Strategy, would like to see Apple go further, like Samsung has done with its 10x telephoto camera on its Galaxy S22 Ultra. “I love the 10x,” Sag said. “I use it all the time.”

Meet Apple’s Photonic Engine

Much of the improvement in smartphone photography relies on changes that are less visible. Faster processors, including graphics processing units, image processors and AI accelerators, are critical to new computational photography software that’s core to the smartphone photography revolution. In Apple’s new iPhone 14 models, it calls its latest processing system the Photonic Engine.

This technology is an advance over Apple’s previous Deep Fusion technology for merging multiple frames into one shot, preserving detail and texture when lighting is modest or dim. With the Photonic Engine, Deep Fusion begins earlier in the image processing pipeline, working on the raw image data to better preserve detail and color, said Caron Thor, Apple’s senior manager of camera image quality.

Video improvements

All iPhone 14 models get a new action mode that can be toggled on for better stabilization when you’re running around with your camera. It’s not yet clear whether the feature crops the image more tightly, a common consequence when gathering imagery from only the central portion of the video, which remains more consistently in view.

The iPhone 14 Pro cameras also add 4K support to the Cinematic Mode that Apple debuted with the iPhone 13. That mode artificially blurs background parts of the video to focus on the main subject. If a new person enters the frame, the mode can switch focus accordingly.

The iPhone 14 Pro cameras also include upgraded image stabilization that should improve photos and videos.

iPhone 14 and 14 Plus camera upgrades

Apple’s lower-end iPhone 14 and iPhone 14 Plus get a new main camera that gathers 49% more light, with a larger sensor and a wider f1.5 aperture so its lens can let in more light, Thor said.

The Photonic Engine technology improves low-light photography on all the new phones’ cameras, though. Low-light performance doubles on the selfie front camera and ultrawide angle back camera, and it improves by a factor of 2.5 with the main camera, she said.

The new selfie camera on the iPhone 14 and 14 Plus has an f1.9 aperture that boosts light gathering by 38% compared with the iPhone 13. And for the first time, it also has autofocus to avoid blurry faces.


]]>The post iPhone 14 Pro’s A16 Chip: Don’t Expect a Major Speed Boost first appeared on Joggingvideo.com.

]]>This story is part of Focal Point iPhone 2022, CNET’s collection of news, tips and advice around Apple’s most popular product.

Apple on Wednesday unveiled the A16 Bionic at its launch event for the iPhone 14, touting the 16 billion transistor processor as the highest performing ever for smartphones. But Apple didn’t detail how much faster it is than last year’s A15, and it’s not likely to deliver a big speed boost.

The A16 Bionic, built on a 4-nanometer process, sports a faster design with six CPU cores — the same combination of two powerful and four efficient cores Apple has used for years. Apple didn’t say how much faster the A16’s high-performance cores are compared with those on the A15, but they use 20% less power, which helps battery life. For the four efficiency cores, Apple didn’t offer any comparisons to the A15.

“I think the A16 is very incremental over the A15,” said Anshel Sag, an analyst at Moor Insights & Strategy. Instead of processor speed, Apple “focused explicitly on camera performance and battery life.”

Without big manufacturing process improvements that shrink transistors, the core data processing circuitry on a chip, designers have a harder time adding features that increase the transistor total. That includes upgrades like more graphics processing units and accelerators for increasingly important artificial intelligence software.

With the A16, Apple’s transistor count increased only modestly from 15 billion transistors on the A15. The A16 has “nearly 16 billion transistors,” Apple marketing chief Greg Joswiak said. The A14 has 11.8 billion transistors, and the A13 has 8.5 billion transistors.

You may not notice new chip performance directly, but it’s key to delivering new abilities, like the iPhone 14 Pro’s advanced photo and video processing. The act of taking a photo exercises the A16’s CPU, GPU, image processor and neural engine, cranking out up to 4 trillion operations per photo to deliver an image.

Unlike with previous iPhone generations, the A16 will only be available in Apple’s higher-end iPhone 14 Pro and Pro Max models. The more mainstream iPhone 14 and 14 Plus will make do with the A15 chip that Apple debuted in 2021.

Apple didn’t immediately respond to a request for comment.

A reported Geekbench test result shows modest performance gains overall. In single threaded tasks, which depend on the speed of an individual CPU core to do one important job, performance rose a significant 10% from a score of 1,707 on the iPhone 13 Pro to 1,879 on the iPhone 14 Pro, according to a test result spotted by MacRumors. But on multithreaded tasks, where a computing device is doing lots of jobs at the same time and uses more CPU cores, the score was virtually unchanged, rising from 4,659 to 4,664.

Geekbench doesn’t reflect the better battery life that more efficient processors enable, however. And the iPhone scores remain head and shoulders above those of Android rivals.
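The percentage gains implied by the reported Geekbench scores above can be checked with a few lines of Python. The helper name is illustrative; the scores are the ones quoted from the reported test result.

```python
def percent_change(old, new):
    """Percentage change going from old to new."""
    return (new - old) / old * 100


# Reported scores: iPhone 13 Pro versus iPhone 14 Pro.
single_core_gain = percent_change(1707, 1879)
multi_core_gain = percent_change(4659, 4664)

print(f"Single-core gain: {single_core_gain:.1f}%")
print(f"Multi-core gain: {multi_core_gain:.1f}%")
```

The single-core result works out to roughly 10%, while the multi-core change is about a tenth of a percent, which is why it reads as virtually unchanged.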


The A16’s 16-core neural engine, designed to speed up AI work like computational photography or processing voice commands, can perform “nearly” 17 trillion operations per second, up from 15.8 trillion on the A15. The five-core GPU has 50% more memory bandwidth to better support graphic-intense games. The A16’s new display engine allows for a 1Hz refresh rate for lower battery consumption, the always-on display, higher peak brightness and smoother antialiasing for blending graphics.

The new chip is important both to Apple’s ambitions and to iPhone owners. Apple’s iPhone chips have maintained a significant performance lead over rivals, helping to ensure iPhones are fast not just for its customers but also for the developers who are crucial to bringing apps to the phones. The A-series line is now the foundation for Apple’s M-series processors used to power its Macs.


Apple unveiled the new processor at its iPhone 14 launch event, a major moment on the annual technology calendar. The iPhone itself is an enormous business for Apple, but it’s also a foundation of a huge technology ecosystem deeply embedded into millions of people’s lives, including services like iCloud and Apple Arcade and accessories like AirPods and Apple Watches.

Still, the A16 also shows how hard it’s become to make progress in the semiconductor business. Two years ago, Apple’s A14 was early to the latest chipmaking technology, the 5-nanometer process from chip foundry Taiwan Semiconductor Manufacturing Co.

The A15 used the 5nm process too. And this year, the A16 uses a 4nm process — not TSMC’s newer 3nm process. The 3nm process offers better performance and miniaturizes circuitry so that more features can be crammed onto a processor, but is only now entering mass production, too late for the latest iPhone.

Qualcomm, the top chipmaker for Android smartphones, acquired startup Nuvia in an attempt to give its processors a big speed boost. However, chip design firm Arm, which licenses processor technology to Apple, Qualcomm and many others, sued Qualcomm in August, saying Qualcomm tried to transfer Nuvia’s technology licenses without Arm’s consent.


]]>The post Here’s How Adobe’s Camera App for Serious Photographers Is Different first appeared on Joggingvideo.com.

]]>

Adobe is working on a camera app designed to take your smartphone photography to the next level.

Within the next year or two, the company plans to release an app that marries the computing smarts of modern phones with the creative controls that serious photographers often desire, said Marc Levoy, who joined Adobe two years ago as a vice president to help spearhead the effort.

Levoy has impeccable credentials: He previously was a Stanford University researcher who coined the term computational photography and helped lead Google’s respected Pixel camera app team.

“What I did at Google was to democratize good photography,” Levoy said in an exclusive interview. “What I’d like to do at Adobe is to democratize creative photography, where there’s more of a conversation between the photographer and the camera.”

If successful, the app could extend photography’s smartphone revolution beyond the mainstream abilities that are the focus of companies like Apple, Google and Samsung. Computational photography has worked wonders in improving the image quality of small, physically limited smartphone cameras. And it’s unlocked features like panorama stitching, portrait mode to blur backgrounds and night modes for better quality at night.

Camera app ‘dialogue’ with the photographer

Adobe isn’t making an app for everyone, but instead for people willing to put in a bit more effort up front to get the photo they want, something matched to the enthusiasts and pros who often already are customers of Adobe’s Photoshop and Lightroom photography software. Such photographers are more likely to have experience fiddling with traditional camera settings like autofocus, shutter speed, color, focal length and aperture.

Several camera apps, like Open Camera for Android and Halide for iPhones, offer manual controls similar to those on traditional cameras. Adobe itself has some of those in its own camera app, built into its Lightroom mobile app. But with its new camera app, Adobe is headed in a different direction — more of a “dialogue” between the photographer and the camera app when taking a photo to get the desired shot.

Adobe is aiming for “photographers who want to think a little bit more intently about the photograph that they’re taking and are willing to interact a bit more with the camera while they’re taking it,” Levoy said. “That just opens up a lot of possibilities. That’s something I’ve always wanted to do and something that I can do at Adobe.”

In contrast, Google and its smartphone competitors don’t want to confuse their more mainstream audience. “Every time I would propose a feature that would require more than a single button press, they would say, ‘Let’s focus on the consumer and the single button press,'” Levoy said.

Adobe camera app features and ideas

Levoy won’t yet be pinned down on his app’s features, though he did say Adobe is working on a feature to remove distracting reflections from photos taken through windows. Adobe’s approach adds new artificial intelligence methods to the challenge, he said.

“I would love to be able to remove window reflections,” Levoy said. “I would like to ship that, because it ruins a lot of my photographs.”

But there are plenty of areas where Levoy expects improvements:

- “Relighting” an image to get rid of problems like harsh shadows on faces. The iPhone’s lidar sensor or other ways of building a 3D “depth map” of the scene can help inform the app where to make such scene illumination decisions.

- A new approach to “superresolution,” the computational generation of new pixels to try to offer higher-resolution photos or more detail when digitally zooming. Google’s Super Res Zoom combines multiple shots to this end, as does Adobe’s AI-based image enhancement tool, but both the multiframe and AI approaches could be melded, Levoy said. “Adobe is working on improving it, and I’m working with the people who wrote that,” he said.

- Merging several shots into one digital photo montage with the best elements of each photo, for example, making sure everybody is smiling and nobody is blinking in a group shot. It’s difficult technology to get working reliably: “Google launched it in Google Photos a long time ago. Of course we de-launched it after people started posting all kinds of horrible creations,” Levoy said.

- New camera sensors. Photographers have long appreciated polarizing filters for their ability to cut glare and reflections, and Sony makes a polarized light sensor that could be useful in phones, Levoy said. It wouldn’t filter the whole image, but instead provide scene detail to make for smarter processing, like reducing reflections from a person’s sweaty face.

- Computational video, which applies to video the same tricks that are now common with photos, “has barely been scratched,” Levoy said. For example, he’d like to see an equivalent of the Google Pixel Magic Eraser feature to remove distractions from videos, too. Video is only getting more important, as the rise of TikTok illustrates, he said.

- Photos that adapt to the screens where people see them. People naturally prefer more contrast and richer colors when seeing photos on small phone screens, but that same photo on a laptop or TV can look garish. Adobe’s DNG file format could allow viewer-based tweaks to dial such adjustments up or down to suit their presentation, Levoy said.

- A mixture of real images and synthetic images like those generated by OpenAI’s DALL-E AI system, a technology Levoy calls “amazing.” Adobe has a strong interest in creativity, and AI-generated images could be prompted not just with text but with your own photos, he said.

- Multispectral image sensors, which gather ultraviolet and infrared light beyond human vision, could provide data to improve the colors we can see, for example figuring out whether an object is blue or whether it’s actually white but looks blue because it’s shadowed.

Pro photographers can be picky

Adobe’s success isn’t guaranteed. A more discriminating market of serious photographers is less likely to be forgiving about computational photography glitches that can show up when performing actions like merging multiple frames into one or artificially blurring backgrounds, for example.

At the same time, mainstream camera apps that ship with phones have steadily improved, adding features like computational raw image formats for more editing flexibility. And Adobe doesn’t get quite the deep level of access to camera hardware that a phone maker does, raising performance challenges.

Another concern: Smartphone cameras and processing capabilities vary widely. Plenty of computational photography tricks only work on the most powerful new phones, and it’s hard to write software that copes with the bewilderingly broad range of hardware options.

But Levoy, who’s seen what computational photography already has delivered despite those challenges, clearly is enthralled.

“It’s just getting exciting,” Levoy said. “We haven’t come anywhere near the end of this road.”


]]>The post You Now Can Tell DALL first appeared on Joggingvideo.com.

]]>

DALL-E, OpenAI’s online service that uses artificial intelligence to generate images from text you type in, now can make bigger images for more creative noodling.

When DALL-E first arrived in April, it could turn a text prompt like “portrait of a blue alien that is singing opera,” “3D rendering of a bouldering wall made of Swiss cheese” or “steampunk elephant” into images measuring 1024×1024 pixels. On Wednesday, the company added a new feature called outpainting that lets you extend the borders of the image. The expanded image is based on the text prompt and the existing imagery, said OpenAI engineer David Schnurr.

DALL-E users “wanted different aspect ratios or just wanted to be able to like take a concept that was produced and expand it into a larger image,” Schnurr said. Processing power limits mean DALL-E can only expand existing imagery, not generate a higher-resolution image to start with, he added.

DALL-E, whose name is a mashup of Pixar’s WALL-E robot and surrealist painter Salvador Dalí, is a remarkable illustration of what’s possible with AI technology today. OpenAI trained its system on 650 million images, each labeled with text. It’s able to blend elements to create interpretations of your text prompt.

The service is free for generating up to 60 images per month, but you have to sign up and get through a waiting list to use it. More than 1 million people have signed up for DALL-E, said product manager Joanne Jang.

Judging by all the DALL-E tweets, people enjoy noodling around with the AI system to create fanciful images. But there are serious uses, too, like creating storyboards for movies, illustrating children’s books and exploring concept art for videogames, Jang said.


]]>The post AMD’s Ryzen 7000 Gives High first appeared on Joggingvideo.com.

]]>

What’s happening

AMD will begin shipping its Ryzen 7000 family of desktop processors, bringing a 29% speed boost to PCs favored by gamers and creative types like video editors and animators.

Why it matters

The new model, packaging three “chiplets” into one processor, keeps the pressure on Intel, so high-end PCs should get more powerful without massive price increases.

What’s next

AMD is working on a more powerful Ryzen 7000 model with higher performance using the company’s 3D V-Cache technology.

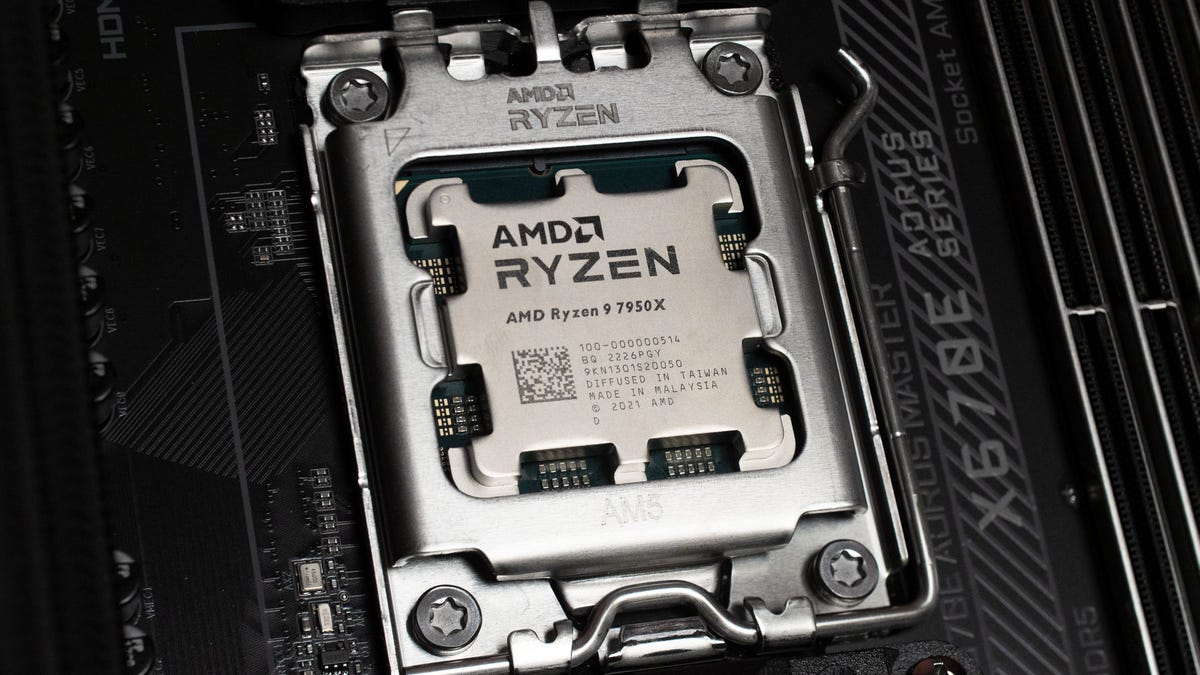

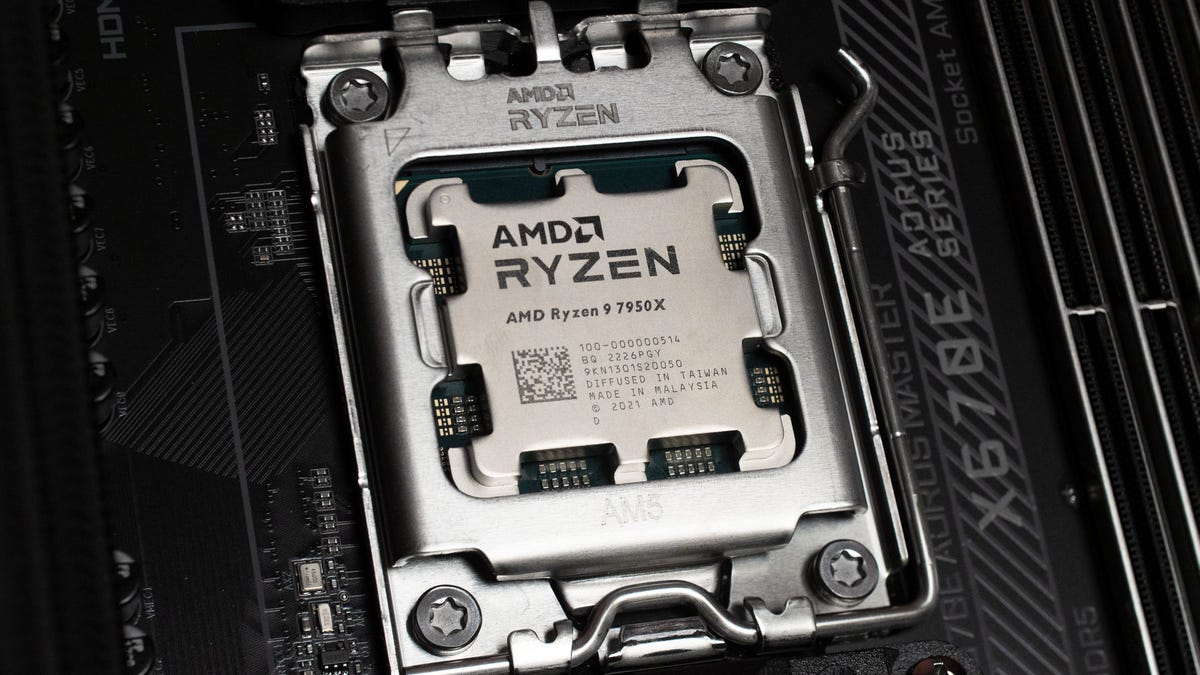

AMD on Monday revealed its Ryzen 7000 series of processors for desktop PCs, promising a 29% speed boost over the Ryzen 5000 line it began selling in 2020. The new models, which go on sale Sept. 27, are good news for gamers, video editors and anyone else who demands top performance.

The 29% speedup shows when running a single, important task. When measuring the performance of multitasking jobs that can span the top-end version of the processor’s 16 total processing cores, the performance boost is 49%, Chief Technology Officer Mark Papermaster said in an exclusive interview. If you’re happy with the same performance as a last-generation Ryzen 5000, the Ryzen 7000 line matches it while using 62% less power, he said.

The most expensive model, the Ryzen 9 7950X, costs $699 — $100 cheaper than the Ryzen 9 5950X at its 2020 launch during the earlier days of the pandemic. AMD also offers $549 7900X, $399 7700X and $299 7600X models that run at slower clock speeds and don’t have as many of the new Zen 4 processing cores. AMD also will continue selling its 2-year-old 5000 products in lower priced machines.

For anyone in the market for a high-end machine, it’s good news. AMD has been carving away sales from Intel, and the new models will keep the pressure on its rival. And it could reduce the temptations some Windows PC users might feel to switch to Macs with Apple’s efficient M1 and M2 processors.

“AMD is giving the gaming and content creation crowd exactly what it’s asking for — better performance or lower power at the same price,” said Patrick Moorhead, an analyst at Moor Insights & Strategy.

Some of the credit for the speed boost goes to Taiwan Semiconductor Manufacturing Co., which builds the AMD designs on a newer 5nm line that’s faster and more efficient with electrical power, helping to push the top clock speed of the chip up 800MHz to a peak of 5.7GHz, Papermaster said. Also deserving is the Zen 4 technology, which churns through 13% more programming instructions for each tick of the chip’s clock than Zen 3.
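Those two figures can be combined into a rough first-order estimate of single-task speedup: the ratio of peak clock speeds multiplied by the gain in instructions per clock tick. The Python sketch below does that with the numbers quoted above. It is an illustration rather than AMD's own methodology, the names are hypothetical, and it assumes the chip runs at its peak clock, which real workloads often don't.

```python
# Figures quoted in the article.
PEAK_CLOCK_GHZ = 5.7        # new top clock speed
CLOCK_INCREASE_GHZ = 0.8    # 800MHz increase over the previous generation
IPC_GAIN = 0.13             # 13% more instructions per clock tick


def estimated_single_thread_uplift(new_clock: float,
                                   clock_increase: float,
                                   ipc_gain: float) -> float:
    """Estimate speedup as (clock ratio) x (instructions-per-clock ratio) - 1."""
    old_clock = new_clock - clock_increase
    clock_ratio = new_clock / old_clock
    return clock_ratio * (1.0 + ipc_gain) - 1.0


uplift = estimated_single_thread_uplift(PEAK_CLOCK_GHZ, CLOCK_INCREASE_GHZ, IPC_GAIN)
print(f"Estimated single-thread uplift: {uplift:.1%}")
```

The estimate lands a little above the 29% figure AMD quotes, which is plausible given that chips rarely sustain their peak clock speed and that memory and other bottlenecks limit real software.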

New chip packaging techniques

More broadly, though, AMD has benefited from the “chiplet” approach it began with the first-generation Zen design in 2017, packaging multiple smaller processing elements into a single, larger processor.

Such chip packaging technology is moving to the forefront of processor innovation, as evidenced by Apple’s M1 Ultra, which joins two M1 Max chips into a single larger processor, and Intel’s 2023 Meteor Lake processor, which includes four separate processing tiles, three built by TSMC.

AMD Ryzen 7000 prices range from $299 to $699.

AMD

The Ryzen 9 7950X includes two chiplets, each with eight Zen 4 cores, and one chiplet for input-output tasks like communicating with memory. AMD will marry more of these eight-core chiplets for server processors it’ll sell to data center customers later this year.

“With a desktop, you’re going from eight cores to 16 cores,” Papermaster said. “Think of a server going all the way up through 64 cores and many more than that in the server we’re going to announce this fall.”

Mobile versions of Zen 4-based processors are scheduled to arrive in laptops in 2023. AMD also plans a compact Zen 4C variation for cloud computing work in data centers that’ll offer up to 128 processing cores in the first quarter of 2023. It sacrifices some clock speed for the ability to run lots of independent jobs in parallel.

Zen 4-based machines also benefit from other speed boosts:

- Faster interfaces to the rest of the computer, supporting DDR5 memory and PCI Express 5.0 links to devices like storage and graphics cards

- The new AM5 socket to plug into circuit boards, which the company will support through at least 2025 to ease upgrades for PC makers and customers

- The ability to process AVX-512 instructions, which should speed up some software like image editors that employ artificial intelligence methods

A third dimension in chiplet packaging

AMD relies chiefly on a relatively straightforward side-by-side packaging approach for its mainstream chips. But it’s added a more sophisticated third dimension to its packaging options, stacking high-speed cache memory on top of the processing cores. It began this approach, called 3D V-Cache, with a rarified top-end option for the earlier Zen 3 processors. 3D V-Cache models are on the way for the Zen 4 generation, too, though Papermaster wouldn’t say when they’ll arrive.

Packaging flexibility has been crucial to AMD. For example, TSMC builds the Zen 4 processing chiplets on its latest 5nm manufacturing process but uses the cheaper, older 6nm process for the chiplet handling input-output functions.

The approach means AMD can spend money more judiciously, since using the newest process raises the cost of a chip’s basic circuitry element, the transistor.

“The cost per transistor is going up, and it’s going to continue to go up in every generation,” Papermaster said. “That’s why chiplets have been so important.”

AMD’s Ryzen 9 7950X plugs into motherboards with a new AM5 socket, a more capable interface that AMD plans to use through at least 2025.

AMD

AMD will also use chiplets built with TSMC’s 5nm technology for its next generation RDNA3 graphics, the foundation of its upcoming Radeon graphics processors, CEO Lisa Su said during AMD’s Ryzen 7000 launch event Monday. Showing off a prototype, she said RDNA3 offers 50% better power efficiency, an important consideration for gamers trying to run software without overheating their PCs. The Ryzen 7000 processors have more basic RDNA2 graphics built in, useful for booting up machines and other basic tasks but expected to be supplemented by more powerful, separate graphics chips.

Don’t count Intel out

AMD has succeeded in part through its chiplet strategy, but it’s also benefited from Intel’s major difficulties advancing its manufacturing over the better part of a decade. That advantage might not last much longer.

Intel expects its own manufacturing technology to match rivals by 2024 and surpass them by 2025, in the view of Chief Executive Pat Gelsinger. And it’s been working for years on its own packaging technologies. Where AMD’s 3D V-Cache is a pricey rarity, Intel will stack chip elements in its mainstream 2023 Meteor Lake PC processor using a technology called Foveros.

“Intel has more diverse and technically advanced options” when it comes to chiplet packaging, Tirias Research analyst Kevin Krewell said.

Another Intel advantage is the combination of performance cores and efficiency cores, an approach cribbed from the smartphone market that better balances speed and battery life. That’s in Intel’s current processor, Alder Lake.

Intel declined to comment.

Could Intel build AMD chips?

If Intel succeeds in its current ambitions, it could one day be building AMD chiplets. That’s because Gelsinger launched a new foundry business which, like TSMC and Samsung, builds chips for others.

AMD once built its own processors but spun that off as the business now called GlobalFoundries. Papermaster wouldn’t comment directly on what it would take to sign on with Intel Foundry Services but said AMD requires trustworthy foundry partners with proven capability and a good working partnership.

“We would love to see more diversity in the foundry ecosystem,” Papermaster said.

]]>The post US Government: Stop Dickering and Prepare for Post first appeared on Joggingvideo.com.

]]>

If you’re wondering when your company should start taking seriously the security problems that quantum computers pose, the answer is now, the US government says.

“Do not wait until the quantum computers are in use by our adversaries to act,” the Cybersecurity and Infrastructure Security Agency said in a guide published Wednesday. “Early preparations will ensure a smooth migration to the post-quantum cryptography standard once it is available.”

Quantum computers, which take advantage of the weird physics of the ultrasmall to perform calculations, are a nascent technology today. Companies like IBM, Intel, Google and Microsoft have joined a host of startups in investing billions of dollars in their development, though. And as they become more powerful, quantum computers could crack conventional encryption, laying bare sensitive communications.

That’s why the US government’s National Institute of Standards and Technology embarked on a search for post-quantum cryptography technology that would protect sensitive information even from quantum computers. With submissions and analysis from experts around the world, NIST picked four post-quantum encryption algorithms and is working to standardize them by 2024.

Quantum computers could also undermine cryptocurrencies, which rely on today’s cryptography technology.

]]>